Your Phone or Mine? Fusing Body, Touch and Device

Written by Kay Ewbank

Thursday, 31 May 2012

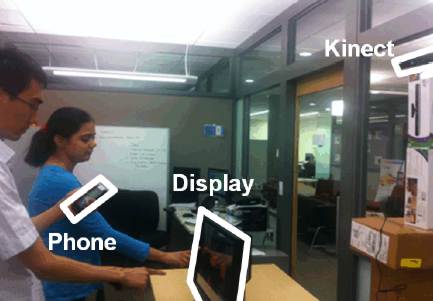

Another novel use for the Kinect: it can work out which of several users is interacting with a multi-user interactive touch display.

How can you work out who's touching a multi-user interactive touch display? It's all very well writing apps that users interact with by touch, but at the moment, if you let several people touch the screen, there's no way for your app to tell automatically who is doing the touching. Researchers at Microsoft Research have published a paper suggesting a way to find out.

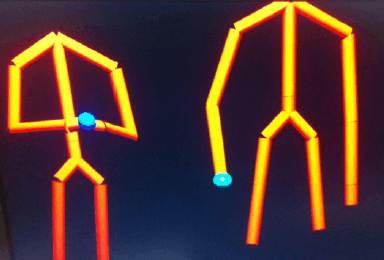

The method, which the researchers call ShakeID, combines data from a smartphone's on-board motion sensors with camera-based body tracking to work out who is touching the screen while holding their phone. As long as each user holds a smartphone or other portable device that can sense its own movement, ShakeID matches the motion sensed by each device to the motion observed by a Microsoft Kinect camera pointed at the users standing in front of the touch display. By comparing the movements of each phone in the scene with the movements of each user, the system can associate each phone with a specific user's hand. It then maps the display's 2D coordinates into the Kinect's 3D camera space, so that each touch on the display can be associated with a specific user. The app therefore knows both which user made each touch and which device that user is holding.

The research paper gives the example of two users touching the display simultaneously in different places to grab content: ShakeID associates each touch with the right user and transfers the correct content to each user's personal device. Because it uses Kinect tracking data, the technique can also work out which hand is holding the device, and can detect when a user moves away from the screen and automatically log that user out of the session.
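The article describes ShakeID's pipeline only at a high level and doesn't give the paper's actual matching method. As a rough illustration, here is a minimal Python sketch of the same idea under our own assumptions: each phone reports linear acceleration (gravity removed) sampled in step with the Kinect skeleton frames; phones and hands are compared by the Pearson correlation of their acceleration magnitudes, which avoids having to align each phone's axes with the camera's; phones are assigned to distinct hands by brute force; and the display has already been calibrated into camera space. Every function name, calibration value and data series is hypothetical, and none of it is the Kinect SDK API or Microsoft Research's code.

```python
import math
from itertools import permutations


def accel_magnitudes(positions, dt):
    """|acceleration| of a tracked joint from 3D positions (n positions -> n - 2 samples)."""
    mags = []
    for i in range(1, len(positions) - 1):
        # Second-order finite difference along each camera-space axis
        acc = [(positions[i + 1][k] - 2 * positions[i][k] + positions[i - 1][k]) / dt ** 2
               for k in range(3)]
        mags.append(math.sqrt(sum(c * c for c in acc)))
    return mags


def pearson(x, y):
    """Pearson correlation of two equal-length series; 0.0 if either is flat."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0  # no motion in one signal, so nothing to match on
    return sxy / math.sqrt(sxx * syy)


def associate(phone_accels, hand_accels):
    """Give each phone a distinct hand, maximising the summed correlation.

    Brute force over permutations: fine for a few users, not for a crowd.
    """
    phones = list(phone_accels)
    best, best_score = {}, -math.inf
    for hands in permutations(hand_accels, len(phones)):
        score = sum(pearson(phone_accels[p], hand_accels[h]) for p, h in zip(phones, hands))
        if score > best_score:
            best, best_score = dict(zip(phones, hands)), score
    return best


def display_to_camera(px, py, origin, x_step, y_step):
    """Map a touch pixel into camera space, given the camera-space position of
    pixel (0, 0) and the camera-space step (metres) per pixel along each display axis."""
    return tuple(origin[k] + px * x_step[k] + py * y_step[k] for k in range(3))


def user_for_touch(touch_point, hand_positions):
    """Attribute a touch to the user whose hand is nearest to it in 3D."""
    nearest = min(hand_positions, key=lambda h: math.dist(touch_point, hand_positions[h]))
    return nearest[0]  # hand keys are (user, side) pairs


def device_for_user(user, association):
    """Return the phone associated with either of this user's hands, or None."""
    for phone, (owner, _side) in association.items():
        if owner == user:
            return phone
    return None


if __name__ == "__main__":
    dt = 1 / 30  # assumed skeleton frame interval (roughly 30 frames per second)

    def wave(freq, centre, frames=60):
        # Synthetic hand path: 0.1 m side-to-side oscillation at `freq` Hz
        return [(centre[0] + 0.1 * math.sin(2 * math.pi * freq * i * dt), centre[1], centre[2])
                for i in range(frames)]

    tracks = {
        ("alice", "left"): wave(1.0, (-0.7, 0.9, 2.0)),  # holding phone-1
        ("alice", "right"): [(-0.5, 1.1, 2.4)] * 60,      # free hand, near the screen
        ("bob", "left"): wave(2.3, (0.3, 0.9, 2.0)),      # holding phone-2
        ("bob", "right"): [(0.5, 1.0, 2.3)] * 60,
    }
    hand_accels = {h: accel_magnitudes(p, dt) for h, p in tracks.items()}
    # Simulated phone readings: same motion, different gain and offset (correlation ignores both)
    phone_accels = {"phone-1": [1.1 * a + 0.05 for a in hand_accels[("alice", "left")]],
                    "phone-2": [0.9 * a + 0.02 for a in hand_accels[("bob", "left")]]}
    assoc = associate(phone_accels, hand_accels)

    # Hypothetical calibration: 1920x1080 display, 1.6 m x 0.9 m, pixel y grows downwards
    touch = display_to_camera(300, 500, origin=(-0.8, 1.5, 2.5),
                              x_step=(1.6 / 1920, 0, 0), y_step=(0, -0.9 / 1080, 0))
    user = user_for_touch(touch, {h: p[-1] for h, p in tracks.items()})
    print(assoc)
    print(user, device_for_user(user, assoc))
```

With this synthetic data, each simulated phone should be paired with the waving hand it was generated from, and the touch at pixel (300, 500) attributed to the user whose free hand is nearest. A real system would also have to cope with sensor noise, clock offset between phone and camera, and users holding still, when there is little motion to correlate; shaking the phone gives a matcher like this a much stronger signal.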

In tests of the technique, users were told that phone movement was being used to associate devices with people, and that if the system failed to recognise their touch they could shake their phone to improve accuracy, which the researchers call ‘shake to associate’. The current work matches phones to users' hands, but the researchers suggest the technique may also work with other body parts. For example, it may be possible to associate a device in a user's pants pocket by matching its motion against the hip-joint data from the Kinect SDK. Or, to put it another way, wiggle your hips to associate. Not a suggestion often heard in software development, but it may just catch on.

Last Updated (Friday, 01 June 2012)