Bitesnap - Applying Deep Learning to Calorie Counting

Written by Nikos Vaggalis

Wednesday, 15 February 2017

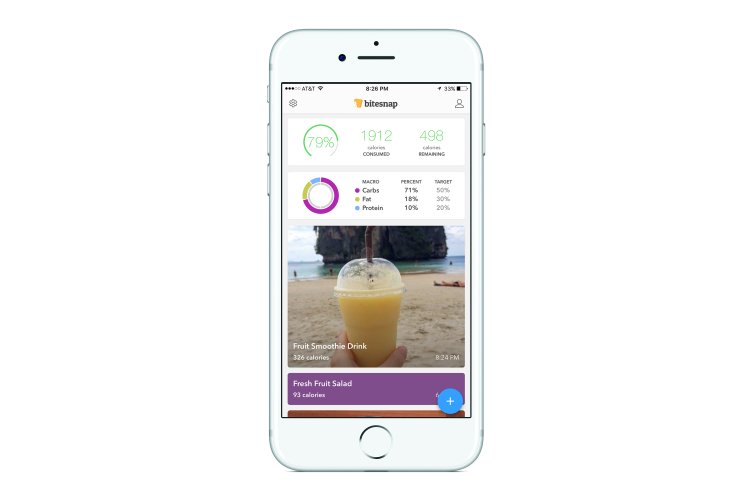

Bitesnap is a recently launched mobile app that uses photo recognition to help you control your calorie intake. To learn more, we interviewed Keith Ito, one of the app's core developers, to discuss Bitesnap's revolutionary vision for the food sector as well as the technological infrastructure behind it.

We all know that a healthy diet is very difficult to maintain, especially nowadays, when work is mostly done sitting down and entertainment is a matter of staring at screens of all shapes and sizes. The problem is made worse by the plethora of tasty but unhealthy food in existence. Whereas diets usually try to impose radical change, Bitesnap works with what you normally eat, trying to keep it healthy or even make it healthier, by closely monitoring food intake on a daily basis.

Not sure how many calories are in the meal you are about to eat? Take a picture of it and let Bitesnap reveal the calories and nutrients behind it, an action that allows you to make quick dietary decisions and keep a complete and comprehensive diary of the food you eat.

That's the concept. To do its magic, Bitesnap takes advantage of the latest developments in Computer Vision and Artificial Intelligence, and it is built on a mobile application development stack.

NV: Keith, take us through the concept behind the app. What motivated you to build it?

KI: Everyone wants to eat more healthily, and studies have shown that keeping a food journal is a great way to lose weight and improve your health. But with existing tools, it can be difficult. It's time consuming, not very engaging, and generally feels like a chore. In recent years, it's become possible to use deep learning to recognize objects in images with high accuracy. We realized that we could apply this to the food logging problem to simplify the process while also producing a log of beautiful, engaging food photos that you can revisit to get a better picture of what you're eating. These days, a lot of people are taking pictures of their food already -- why not get additional information on calories and nutrients to help make better decisions?

NV: The product information says: "with Bitesnap, you can log a meal just by taking a picture and confirming a few details." What sort of details are we talking about?

KI: Eventually, we plan on getting to the point where you can just take a picture and we'll figure out the rest. In this first version, we're able to recognize what the food is, but you still need to confirm or adjust the portion size, and sometimes you need to adjust how the food was prepared as well. We've done a lot of work to narrow down the options so you can make these adjustments with minimal effort. Over time, as we collect more data, we'll learn to make these adjustments automatically.

NV: From the user's point of view, what happens after you take a picture of your meal? What is the user presented with and what can they do with that data? Does the app also process it in some way, say, to offer dietary advice?

KI: After taking a picture, one of two things might happen. If we recognize it as a meal you've eaten before, we'll offer you the option of copying the meal with just one tap. If not, we'll show you a list of foods that were recognized in the image. You can select items from this list and adjust their portion sizes if necessary. We show you a running tally of calories in the meal, and once you're done selecting items, the meal is added to your feed. The feed lets you easily look back to see what you've been eating. We keep track of calories and nutrients on a daily and weekly basis and let you see how you're doing relative to your targets. Eventually, we plan on adding recommendations on what you should eat next based on your dietary needs and preferences.
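To make the "running tally" and "relative to your targets" ideas concrete, here is a minimal Python sketch. It is not Bitesnap's code: the `FoodItem` class, the function names, and all calorie figures are hypothetical, chosen only to illustrate how selected items with adjustable portion sizes could add up to a meal total and be compared with a daily target.

```python
from dataclasses import dataclass


@dataclass
class FoodItem:
    """One recognized food the user has selected (hypothetical structure)."""
    name: str
    calories_per_serving: float  # placeholder value, not real nutrition data
    servings: float = 1.0        # portion size, adjustable by the user

    @property
    def calories(self) -> float:
        return self.calories_per_serving * self.servings


def running_tally(items):
    """Return the cumulative calorie total after each selected item."""
    totals = []
    total = 0.0
    for item in items:
        total += item.calories
        totals.append(total)
    return totals


def remaining_for_day(meal_totals, daily_target):
    """Calories left against the daily target (negative means over target)."""
    return daily_target - sum(meal_totals)


# Usage: two selected items, one with an adjusted portion size.
meal = [
    FoodItem("grilled chicken", 200.0, servings=1.5),
    FoodItem("rice", 150.0),
]
tally = running_tally(meal)                      # tally after each selection
left = remaining_for_day([tally[-1], 600.0], 2000.0)
```

A weekly view would follow the same pattern, summing the daily totals and comparing them with a weekly target.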

NV: How are Computer Vision and Artificial Intelligence utilized in the app, and what about the underlying network's success rate (for example, when scanning salads, where by definition the ingredients aren't easy to tell apart)?

KI: We use computer vision to recognize what you're eating. We're doing image recognition using convolutional neural networks. We take a large number of images that are labeled with the foods in them, and the network learns weights for its edges that minimize the error in the labels it predicts. In terms of accuracy, this ends up being highly dependent on training data. With enough examples, the network can get good at making even subtle distinctions, like between different types of lettuce that may be in a salad. We're constantly working on gathering and cleaning more data so we can improve our model.

NV: What approach is used to calculate calories? Recognizing the ingredient is the first parameter of the equation; the other is its amount. How does Bitesnap handle it?

KI: When computing calories and nutrients, the important questions to answer are: "What is it?", "How was it prepared?" and "How much is it?" Right now, computer vision can take care of the first question and, in many cases, the second question. It's currently up to the user to help us out with the third question. Eventually, we plan on getting to the point where we can answer all three questions automatically. When you take a picture of a meal that you've eaten before, we can usually match it directly to a meal in your history. In that case, you just need to press "OK", and we'll copy the whole thing, including preparation and portion size. This is sort of a preview of what we think the experience will be like for all meals eventually.
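The three questions above map naturally onto a small calculation. The Python sketch below is an illustration only, not Bitesnap's method: the lookup table, the `estimate_calories` function, and every number in it are assumptions, and the portion is taken in grams purely for simplicity.

```python
# Hypothetical lookup table: (food, preparation) -> kcal per 100 g.
# The numbers are placeholders for illustration, not real nutrition data.
KCAL_PER_100G = {
    ("potato", "boiled"): 80.0,
    ("potato", "fried"): 300.0,
    ("lettuce", "raw"): 15.0,
}


def estimate_calories(food, preparation, grams):
    """Combine the three answers: what it is, how it was prepared, how much."""
    if grams < 0:
        raise ValueError("portion size must be non-negative")
    try:
        kcal_per_100g = KCAL_PER_100G[(food, preparation)]
    except KeyError:
        raise ValueError(f"no entry for {preparation} {food}") from None
    return kcal_per_100g * grams / 100.0


# Usage: the same food and portion, prepared two different ways.
fried = estimate_calories("potato", "fried", 150)
boiled = estimate_calories("potato", "boiled", 150)
```

In this sketch the first two arguments stand in for what image recognition would supply, while the third is the portion size the user currently confirms by hand.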

NV: What is the toolchain used to build the AI part? Are you plugging into a cloud service like IBM Bluemix or using a solution built with, for example, TensorFlow?

KI: We have a custom solution, written in Python. We benefit from the great ecosystem of open-source machine learning tools that are available in Python. We use a mix of OpenCV, scikit-learn, Theano and Keras.

NV: On the mobile application development technology stack, what did you decide to go for and why? And how do you cater for both iOS and Android? Are there different code bases or is there a common one for both platforms?

KI: We're using Python on the backend. It makes sense because all of our machine learning code is in Python. We run a PostgreSQL database, and everything is served out of AWS. We like to keep things simple. The app uses React Native, which lets you write code in JavaScript and run it on both Android and iOS. We're a small team, so having a single codebase is a big win. Occasionally, we have to write a bit of native code, but this is surprisingly rare, and React Native's bridging support makes it easy to do.

KI: We believe there's a lot of value in helping people understand their dietary needs and preferences and in connecting them with sources of healthy food that they'll enjoy. Our priority will always be our users. We want to enable them to gain insight into what they're eating and help them achieve their dietary goals; any monetization we add in the future will fit in with that.

NV: After spending so much time developing the app, making the end result known to the public is a top priority. How easy is it to do that, and how do you go about it?

KI: What we've released so far is really just the beginning in terms of where we think this technology can go. It's by no means an end result. We're hard at work taking the first round of feedback that we've gotten and making improvements and adding features that people have asked for. Getting the word out is always a big challenge. We have a few ideas for marketing and distribution that we think will be really fun. Stay tuned!

NV: Why is it that Bitesnap is currently available only in the US and Canada? Are you looking to expand into more countries and, if so, how would you localize it to cater for different cultures with different cuisines?

KI: Initially, we're being a bit cautious in expanding because foods and preparation methods can vary a lot from country to country. We want to ensure that users outside the US and Canada will have a good experience before launching to them. There are also some interesting localization issues. For example, we only support imperial units for portion size right now, and we'll need to support metric in most places. We'd like to support more countries as soon as we can. If you're outside the US, we're running a beta that you can sign up for here.

NV: Finally, how do you think technology is affecting the food sector? What are your plans for the foreseeable future (for example, what functionality are you looking to add in a future version), and what do you expect to be the deciding factor in Bitesnap's successful and widespread public adoption?

KI: Technology is transforming the way we think about health and wellness. We've already seen a huge shift in how people track their activity and exercise using things like Fitbits and smartphones -- these technologies have dramatically reduced the amount of effort it takes to log and analyze how you're burning calories. We're hoping to bring something similar to the other side of the equation: tracking the foods that you're putting into your body. We've taken the first steps toward that goal, but there's still a lot of work to do. We believe that as food logging becomes easier and faster, it will appeal to a broader segment of people, and this will drive widespread adoption.

More Information

Bitesnap - The easier way to track what you eat


Last Updated (Wednesday, 15 February 2017)